Multimodal Deep Learning for Robust RGB-D Object Recognition

|

Abstract

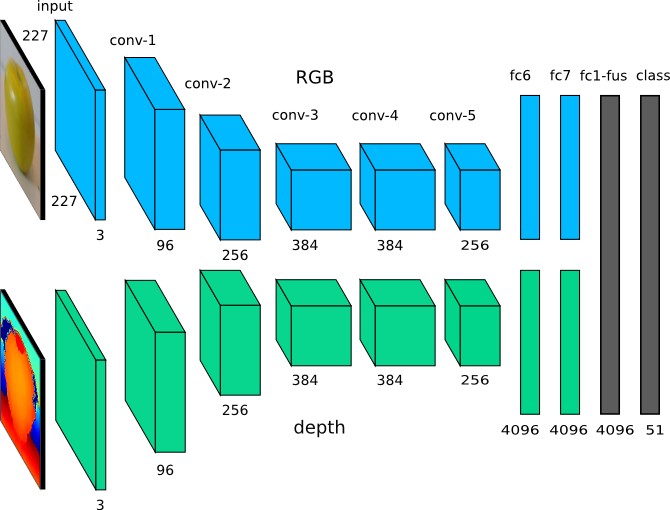

This paper leverages recent progress on Convolutional Neural Networks (CNNs) and proposes a novel RGB-D architecture for object recognition. Our architecture is composed of two separate CNN processing streams, one for each modality, which are consecutively combined with a late fusion network. We present state-of-the-art results on the UW RGB-D Object dataset. The package contains a modified Caffe version for training of multimodal fusion networks, ready trained networks and a demo to test our pre-trained model.Download

Code including caffe models and test demo.References

If you find this library useful in your research, please consider citing:Multimodal Deep Learning for Robust RGB-D Object Recognition

Andreas Eitel, Jost Tobias Springenberg, Luciano Spinello, Martin Riedmiller, Wolfram Burgard

IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 2015

Download arXiv BibTeX

Terms of use

This program is provided for research purposes only. Any commercial use is prohibited. If you are interested in a commercial use, please contact the copyright holder. This program is distributed WITHOUT ANY WARRANTY.Version history

v.1 Initial version [correct] v01v.1.1 Updated caffemodel, slight change depth2color processing [this color processing didn't have the recognition performance from v.1 for the fusion network] v01.1

v.1.2 Back to depth2color processing as in v.1. code cleanup in create_fusion_images.py. Remove depth2image script because of difference to create_fusion_images.py