Markus Spies (Kuderer)

Research

Socially compliant robot navigation

Mobile robots that operate in environments shared with humans need to predict how people will move in order to plan their own navigation actions. We developed a novel approach to predicting the movements of pedestrians. Our method reasons about entire trajectories that arise from the interactions between people in navigation tasks. It uses maximum entropy learning, based on features that capture relevant aspects of the trajectories, to determine the probability distribution that underlies human navigation behavior. Hence, mobile robots can use our approach to predict forthcoming interactions with pedestrians and react in a socially compliant way.
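A minimal sketch of the maximum entropy principle in this setting, under simplifying assumptions that are ours and not the papers': there is a finite set of candidate joint trajectories, each summarized by a feature vector f(τ) (the features here are hypothetical placeholders for quantities such as path length or proximity to other pedestrians), and the cost of a trajectory is linear, θ·f(τ). The maximum entropy distribution that reproduces the observed feature averages has the form p(τ) ∝ exp(−θ·f(τ)), and θ can be fit by gradient ascent on the demonstration log-likelihood, whose gradient is the expected feature vector minus the observed one. The function names are hypothetical; see the papers for the actual trajectory representation and features.

```python
import math
from typing import List, Sequence


def maxent_probs(theta: Sequence[float], features: Sequence[Sequence[float]]) -> List[float]:
    """p(tau) proportional to exp(-theta . f(tau)) over a finite candidate set."""
    costs = [sum(t * f for t, f in zip(theta, fv)) for fv in features]
    c_min = min(costs)  # shift costs for numerical stability; p is unchanged
    weights = [math.exp(-(c - c_min)) for c in costs]
    z = sum(weights)  # partition function (up to the constant shift)
    return [w / z for w in weights]


def expected_features(theta: Sequence[float], features: Sequence[Sequence[float]]) -> List[float]:
    """Feature expectation E_p[f] under the current maximum entropy model."""
    probs = maxent_probs(theta, features)
    dim = len(features[0])
    return [sum(p * fv[k] for p, fv in zip(probs, features)) for k in range(dim)]


def fit_weights(
    features: Sequence[Sequence[float]],
    demo_mean: Sequence[float],
    lr: float = 0.1,
    iters: int = 2000,
) -> List[float]:
    """Gradient ascent on the average log-likelihood of the demonstrations.

    The gradient is E_p[f] - mean demonstrated features, so at the optimum the
    model reproduces the observed feature averages (feature matching).
    """
    theta = [0.0] * len(demo_mean)
    for _ in range(iters):
        exp_f = expected_features(theta, features)
        theta = [t + lr * (e - d) for t, e, d in zip(theta, exp_f, demo_mean)]
    return theta


if __name__ == "__main__":
    # Three hypothetical candidate trajectories with two hypothetical features each
    candidates = [[1.0, 2.0], [2.0, 0.5], [3.0, 1.0]]
    observed_mean = [1.8, 1.2]  # average features of the recorded trajectories
    theta = fit_weights(candidates, observed_mean)
    print(maxent_probs(theta, candidates))  # approximately [0.4, 0.4, 0.2]
```

Among all distributions whose feature expectations match the demonstrations, the exponential form is the one with maximum entropy, so it commits to nothing beyond what the chosen features capture.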

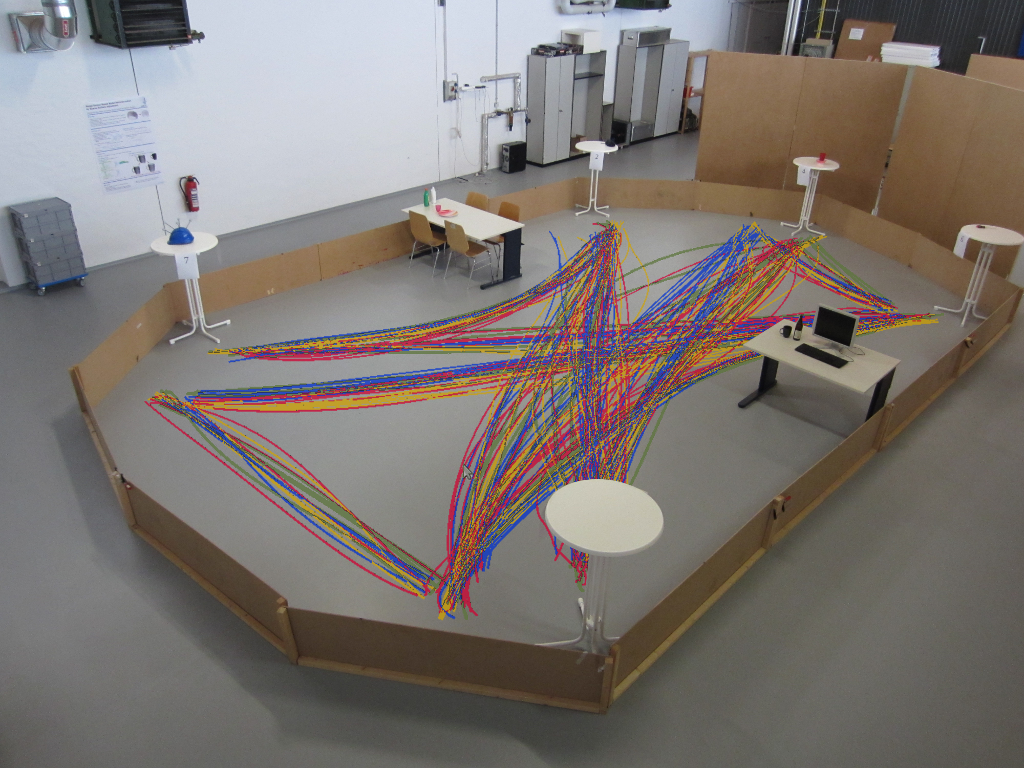

For our experiments, we recorded the natural navigation behavior of pedestrians in a motion capture studio. To distract the participants from the navigation task, we had them read and memorize newspaper articles placed at consecutively numbered locations. At a signal, they all walked simultaneously to their respective next location. We provide two datasets of interacting pedestrians: one with four participants and one with three.

| Setup | Used in the experiments of | Data |
|---|---|---|
| Four pedestrians, environment with obstacles | Learning to Predict Trajectories of Cooperatively Navigating Agents | [Dataset] |
| Three pedestrians, environment without obstacles | Feature-Based Prediction of Trajectories for Socially Compliant Navigation | [Dataset] |

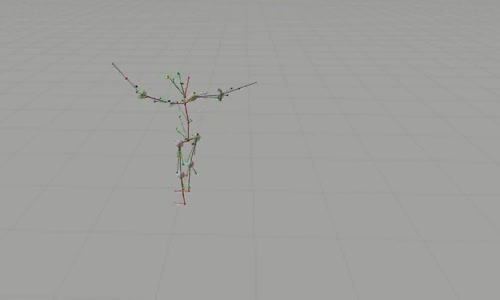

Skeleton Tracking

Methods that accurately capture human motion from optical markers in motion capture systems are important for a large variety of applications, including animation, interaction, orthopedics, and rehabilitation. The major challenges are to associate the observed markers with skeleton segments, to track markers between consecutive frames, and to estimate the underlying skeleton configuration in each frame. Existing solutions often assume fully labeled markers, which usually requires labor-intensive manual labeling, especially when markers are temporarily occluded during a movement.

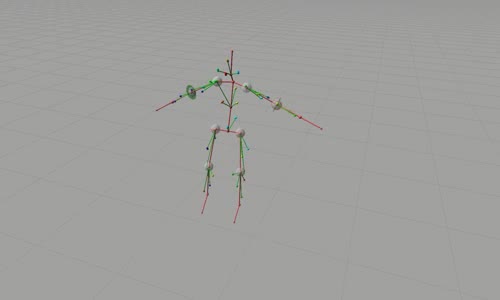

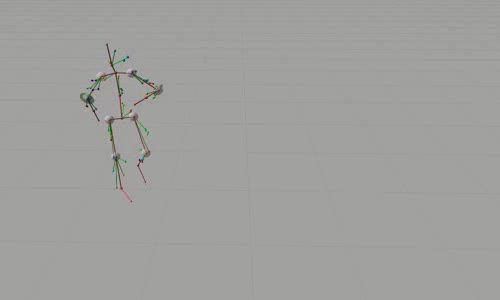

We developed a fully automated method that initializes and tracks the skeleton configuration of humans from optical motion capture data without any user intervention. Our method uses a flexible T-pose-based initialization that works with a wide range of marker placements, robustly estimates the skeleton configuration through least-squares optimization, and exploits the skeleton structure to label markers fully automatically. More details on the skeleton tracking techniques can be found in our paper Online Marker Labeling for Fully Automatic Skeleton Tracking in Optical Motion Capture.
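The labeling step can be illustrated with a minimal sketch, assuming (our assumption, not necessarily the paper's procedure) that the current skeleton estimate yields a predicted 3D position for every named marker. Each predicted marker is matched to at most one observed, unlabeled point so that the summed distance is minimal; a marker with no observation within a gating distance is treated as occluded. The marker names, the `gate` threshold, and `label_markers` are hypothetical. The exhaustive search below is only practical for a handful of markers; for a full marker set, an assignment solver such as the Hungarian algorithm (e.g. `scipy.optimize.linear_sum_assignment`) would be the usual choice.

```python
import math
from typing import Dict, List, Optional, Set, Tuple

Point = Tuple[float, float, float]


def label_markers(
    predicted: Dict[str, Point], observed: List[Point], gate: float
) -> Dict[str, Optional[int]]:
    """Assign each named marker the index of an observed point, or None if occluded.

    Minimizes the summed Euclidean distance. Leaving a marker unlabeled costs
    `gate`, and matches farther away than `gate` are not allowed.
    """
    names = list(predicted)
    best_cost = math.inf
    best: Dict[str, Optional[int]] = {}

    def search(i: int, used: Set[int], cost: float, current: Dict[str, Optional[int]]) -> None:
        nonlocal best_cost, best
        if cost >= best_cost:  # this branch cannot beat the best complete labeling
            return
        if i == len(names):
            best_cost, best = cost, dict(current)
            return
        name = names[i]
        for j, obs in enumerate(observed):
            if j in used:
                continue
            d = math.dist(predicted[name], obs)
            if d <= gate:  # gating: only nearby observations are candidates
                current[name] = j
                used.add(j)
                search(i + 1, used, cost + d, current)
                used.remove(j)
        current[name] = None  # hypothesis: marker is occluded in this frame
        search(i + 1, used, cost + gate, current)
        del current[name]

    search(0, set(), 0.0, {})
    return best


if __name__ == "__main__":
    # Hypothetical marker positions (meters) predicted from the skeleton estimate
    predicted = {"LWRIST": (0.0, 0.0, 0.0), "RWRIST": (1.0, 0.0, 0.0), "HEAD": (0.0, 0.0, 2.0)}
    observed = [(1.02, 0.0, 0.0), (0.01, 0.0, 0.0), (5.0, 5.0, 5.0)]  # unlabeled points
    print(label_markers(predicted, observed, gate=0.1))
    # {'LWRIST': 1, 'RWRIST': 0, 'HEAD': None}
```

Here the far-away point at index 2 stays unlabeled (for instance a stray reflection), and HEAD is reported as occluded rather than forced onto it.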

We evaluated our approach on the following datasets, which we manually annotated to obtain ground-truth marker labels. For each dataset, we provide a video showing the real-time tracking performance of our approach as well as the ground-truth labels.

| Dataset | Movements | Files |
|---|---|---|
| Dataset 1 | Slow, simple movements, very few occlusions | [Movie] [Marker labels] |
| Dataset 2 | Mostly slow movements, some running and squats | [Movie] [Marker labels] |
| Dataset 3 | Walking, some jumping, marker occlusions during duck-walk | [Movie] [Marker labels] |
| Dataset 4 | Walking, sitting on a chair, climbing on top of a chair and a table, jumping | [Movie] [Marker labels] |
| Dataset 5 | Gymnastics, squats and jumping jacks | [Movie] [Marker labels] |